Private Link is the IP filtering of the cloud

Use cases for Private Link and differences in its implementation across the major cloud providers.

Changelog

- November 27, 2024: Updated to reflect AWS PrivateLink’s new cross-region connectivity support. This feature brings AWS PrivateLink’s capabilities more in line with Azure and GCP’s offerings.

- January 30, 2022: Original post published.

Private Link

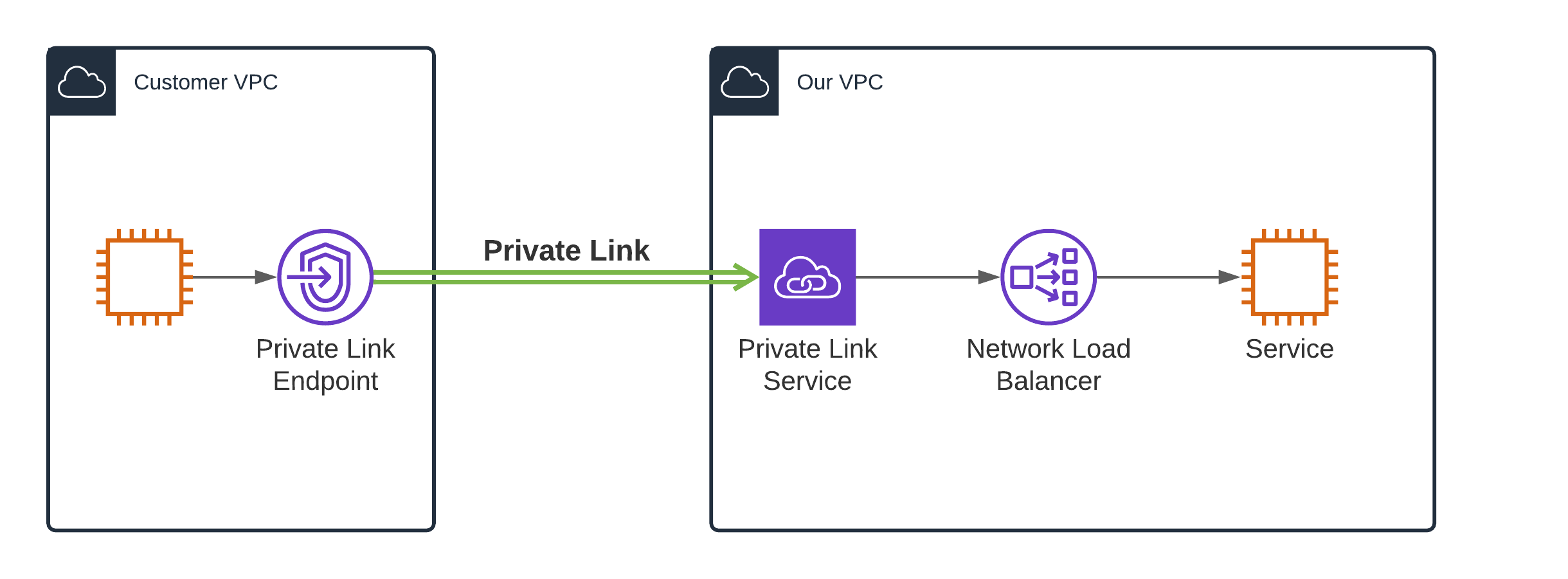

Private Link allows secure connectivity to services hosted in different cloud provider accounts.

- Private Link allows you to connect to a service in a different VPC—and that VPC can be owned by a different account.

- The connection is secure [1]:
  - It doesn’t leave the cloud provider’s network.
  - Data exchanged over Private Link is encrypted (I wasn’t able to find much about the encryption in the documentation; it makes sense to secure the connection with TLS anyway).
  - You can use native features, such as IAM and security policies, to limit who can access the connection.

- The connection is unidirectional:
  - Resources in the source VPC can access a dedicated service in the target VPC.
  - Other resources in the target VPC are not exposed.
  - Resources in the source VPC are not exposed.

- The service provider creates a Private Link Service with a globally unique Alias.

- The consumer creates an Endpoint, which uses the Alias of the Service to identify the target. The Endpoint gets a static IP address and a randomly generated DNS name.

Note on terminology

- Private Link is not a standard name: it comes from AWS, whose service is called AWS PrivateLink. AWS was the first to offer such a service, and the name caught on. Azure calls its equivalent Azure Private Link, and GCP has Private Service Connect (PSC).

- Private Link can also be used to access the cloud provider’s own services (such as S3), among others. Here we focus on the SaaS provider use case.

Use case

We run a SaaS company. We offer a simple service to send emails:

```shell
curl -XPOST -H 'Authorization: ...' https://customerA.my-saas.io/emails -d '{
  "from": "alice@customerA.com",
  "to": "bob@example.com",
  "subject": "foo",
  "body": "bar"
}'
```

The service is easy to use and developer-friendly. It catches on.

Security comes in layers

Cecilia from MegaCorp, Inc. wants to use my-saas.io in her latest project. This is a big contract for us! However, she adheres to defense in depth.

The base URL of her account, https://megacorp.my-saas.io, is easy to guess. Even if we swap the vanity domain megacorp.my-saas.io for something more random, like db1534b8-753f-4531-b7dc-fefb3d6f53f1.my-saas.io, it can leak. The hostname is present in DNS queries, in the SNI field of the TLS handshake, in request logs, etc.

Cecilia would like us to drop any connections to https://megacorp.my-saas.io unless they come from MegaCorp’s VPC.

Solution sketch

PROXY protocol v2

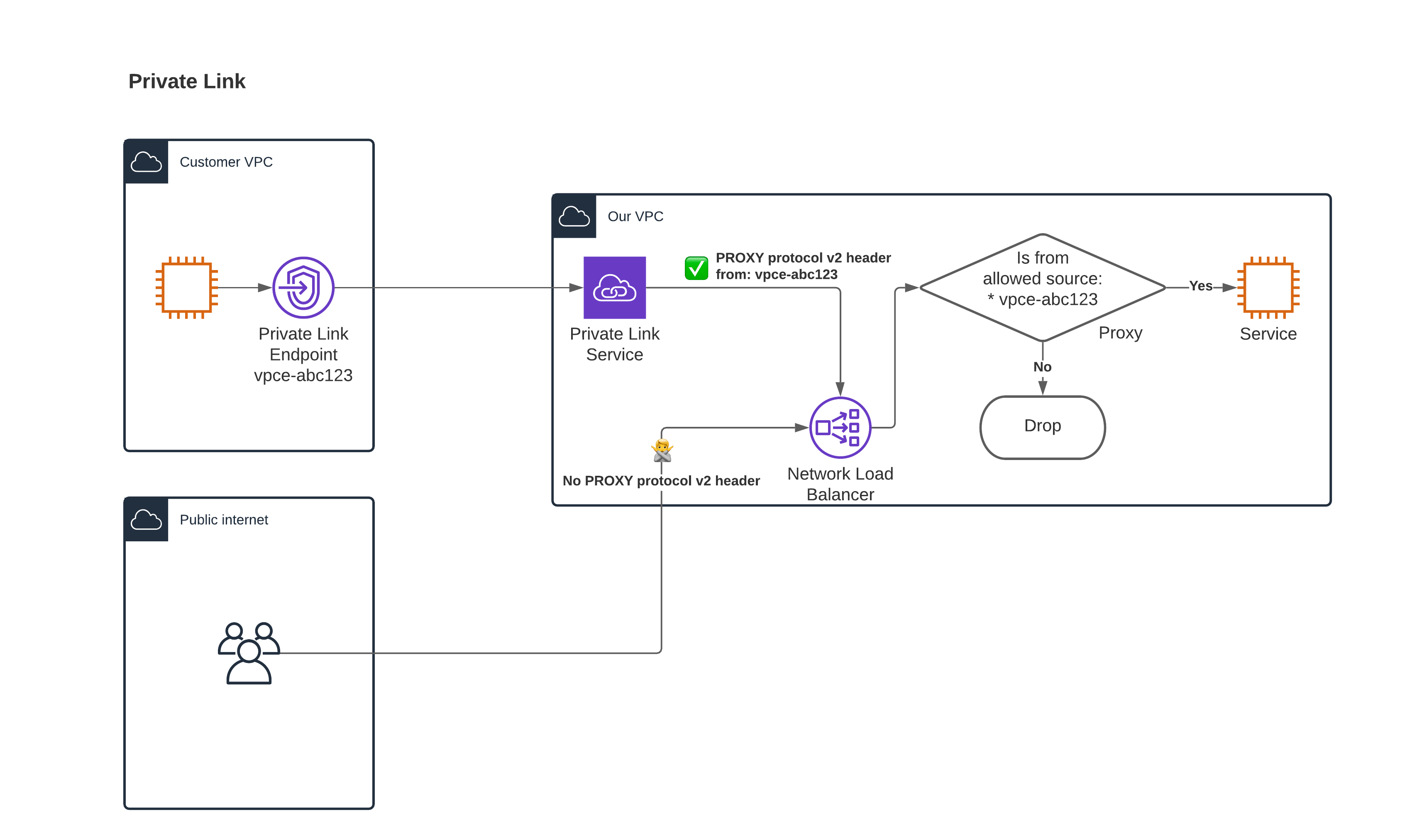

How can we attribute connections to specific customers? All the connections come from the same IP address (that of the Private Link Service).

PROXY protocol v2 (or PP2 for short) to the rescue. Cloud providers allow us to enable PP2 on the service. Each connection will be annotated with extra attributes:

- Source IP address of the connection.
  - Note that it is in the source VPC’s private address space, so it is not useful for traffic filtering.

- VPC Endpoint ID: a unique identifier of the customer’s VPC Endpoint. Cloud providers use different names for it; in this post we call it a link ID.

What is PP2? It’s a protocol popularized by HAProxy. Quoting their docs:

The PROXY protocol provides a convenient way to safely transport connection information such as a client’s address across multiple layers of NAT or TCP proxies. It is designed to require little changes to existing components and to limit the performance impact caused by the processing of the transported information.

In practice, PP2 adds a binary (but unencrypted) blob at the start of the byte stream. Because it sits at the very start of the connection, it comes even before the TLS handshake. The receiver has to consume it first and then hand the rest of the byte stream over to the HTTP library or application logic.
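To make the "consume it, then hand the rest over" step concrete, here is a minimal Go sketch that parses one PP2 v2 header from the start of a byte slice. It follows the layout in HAProxy's PROXY protocol spec (12-byte signature, version/command byte, family byte, 2-byte length, address block, then TLVs). The names `parsePP2`, `Header`, and `TLV` are hypothetical; a real proxy would read from a buffered `net.Conn` and would more likely rely on a maintained library such as pires/go-proxyproto.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"errors"
	"net/netip"
)

// pp2Signature is the fixed 12-byte prefix of every PROXY protocol v2 header.
var pp2Signature = []byte{0x0D, 0x0A, 0x0D, 0x0A, 0x00, 0x0D, 0x0A, 0x51, 0x55, 0x49, 0x54, 0x0A}

// TLV is one type-length-value extension carried after the addresses.
type TLV struct {
	Type  byte
	Value []byte
}

// Header keeps only the fields this sketch needs.
type Header struct {
	Source netip.AddrPort // client address (private to the consumer VPC for Private Link)
	TLVs   []TLV          // provider-specific extensions, e.g. the link ID
}

// parsePP2 consumes one PP2 header from the start of buf and returns it along
// with the remaining bytes, which should then go to the TLS/HTTP layer.
func parsePP2(buf []byte) (Header, []byte, error) {
	var h Header
	if len(buf) < 16 || !bytes.Equal(buf[:12], pp2Signature) {
		return h, buf, errors.New("no PP2 v2 signature")
	}
	if buf[12]>>4 != 0x2 { // high nibble of the 13th byte is the version
		return h, buf, errors.New("unsupported PP2 version")
	}
	n := int(binary.BigEndian.Uint16(buf[14:16])) // length of everything after the 16th byte
	if len(buf) < 16+n {
		return h, buf, errors.New("truncated PP2 header")
	}
	body, rest := buf[16:16+n], buf[16+n:]

	// The address block size depends on the family (high nibble of the 14th byte).
	addrLen := 0
	switch buf[13] >> 4 {
	case 0x1: // IPv4: src(4) dst(4) src port(2) dst port(2)
		addrLen = 12
	case 0x2: // IPv6: src(16) dst(16) src port(2) dst port(2)
		addrLen = 36
	case 0x3: // UNIX: src(108) dst(108)
		addrLen = 216
	}
	if len(body) < addrLen {
		return h, buf, errors.New("short address block")
	}
	switch addrLen {
	case 12:
		ip, _ := netip.AddrFromSlice(body[0:4])
		h.Source = netip.AddrPortFrom(ip, binary.BigEndian.Uint16(body[8:10]))
	case 36:
		ip, _ := netip.AddrFromSlice(body[0:16])
		h.Source = netip.AddrPortFrom(ip, binary.BigEndian.Uint16(body[32:34]))
	}

	// Whatever follows the addresses is a sequence of TLVs: type(1) length(2) value.
	for tlvs := body[addrLen:]; len(tlvs) > 0; {
		if len(tlvs) < 3 {
			return h, buf, errors.New("malformed TLV")
		}
		l := 3 + int(binary.BigEndian.Uint16(tlvs[1:3]))
		if len(tlvs) < l {
			return h, buf, errors.New("malformed TLV")
		}
		h.TLVs = append(h.TLVs, TLV{Type: tlvs[0], Value: tlvs[3:l]})
		tlvs = tlvs[l:]
	}
	return h, rest, nil
}
```

The returned `rest` is what you would pass on to `crypto/tls` or the HTTP server; the link ID lives in one of the `TLVs`, in a provider-specific format.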

Note that PP2 is optional: it is disabled by default, and you need to enable it explicitly.

Back to our solution

- Customers register their link IDs with us.

- We add a proxy (it can work at L7 or L4) that:
  - Reads the PP2 header to learn the source of the connection.
    - Public internet connections have no link ID and go through the same checks.
  - Reads the SNI from the TLS handshake to learn the target resource.
  - If the target resource has a link ID configured, checks whether the source link ID matches:
    - Match ==> Accept the connection and route it to the target.
    - No match ==> Drop the connection.

As a result, connections to a customer’s resources are dropped unless they originate from the VPC endpoint that customer configured.
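The per-connection decision fits in a few lines. A minimal Go sketch, assuming the SNI hostname and the link ID have already been extracted from the connection; the `allowedLinks` table, `authorize`, and the endpoint ID are hypothetical placeholders for wherever the customer-provided link IDs are stored.

```go
package main

import "errors"

// allowedLinks maps a customer hostname (from SNI) to the link IDs that
// customer registered. Hostnames without an entry accept any source.
// The data is hypothetical.
var allowedLinks = map[string][]string{
	"megacorp.my-saas.io": {"vpce-0123456789abcdef0"},
}

var errDenied = errors.New("connection dropped: link ID does not match")

// authorize decides whether a connection may be routed to its target.
// linkID is "" for public internet connections (no PP2 link ID).
func authorize(sni, linkID string) error {
	allowed, restricted := allowedLinks[sni]
	if !restricted {
		return nil // target has no link ID configured
	}
	for _, id := range allowed {
		if id != "" && id == linkID {
			return nil // match: accept and route to the target
		}
	}
	return errDenied // no match (or no link ID at all): drop
}
```

Public internet traffic (empty link ID) still reaches customers who have not configured a link ID; only restricted targets reject it.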

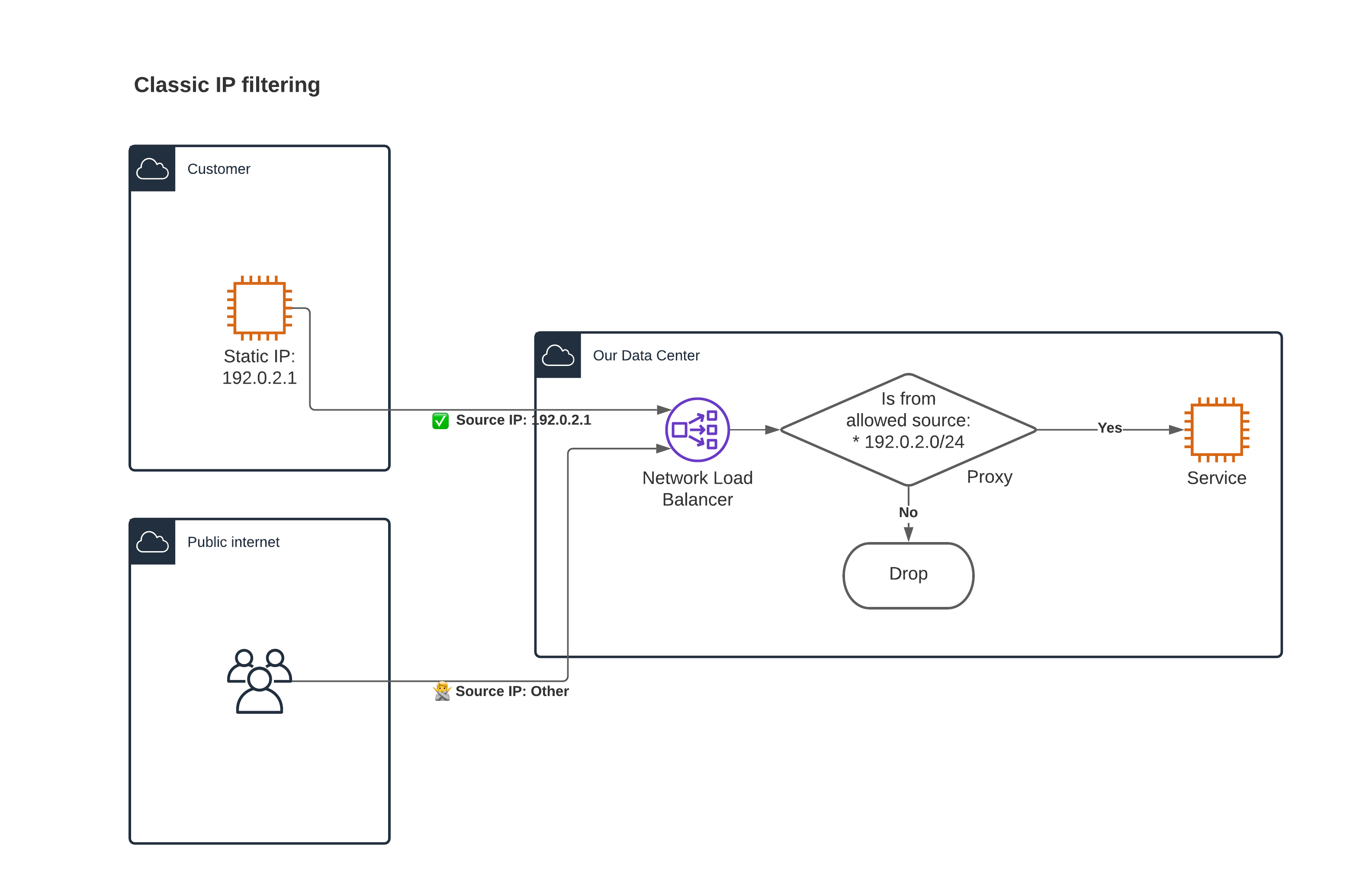

Similarities with classic IP filtering

Conceptually, IP filtering is a similar solution. It is simpler: the source IP is part of every TCP/UDP connection, so there is no need to involve PP2. The connections are direct, with no extra Services or Endpoints.

In the cloud, this is not feasible, because there are no static IPs on either side:

- We have managed load balancers in front of our infrastructure, and we don’t control their IPs. We (or our cloud provider) autoscale them, adding and removing IP addresses.

- Customers often don’t have static IPs for their egress traffic.

Alternative solution: VPC peering

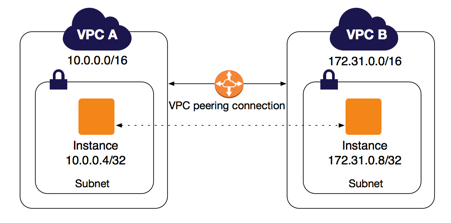

VPC peering allows two VPCs to share network address space—similarly to how you may use a switch to join two network segments.

Diagram from https://docs.aws.amazon.com/vpc/latest/peering/what-is-vpc-peering.html

This sounds like a good idea, but it has a couple of drawbacks for our SaaS use case:

- The private IP ranges of the peered VPCs can’t overlap, which means you need to coordinate the address space with all your customers.

- The access is bidirectional, as if the resources were in the same network.
  - You can and should set up access-control rules, but a mistake in them may let your customers access your infrastructure and/or let you access your customers’ infrastructure.

Security considerations

Can you trust the PP2 header? Can it be spoofed?

The scenario we need to watch out for is someone adding their own PP2 header, and therefore spoofing the connection source.

Private Link traffic always carries a PP2 header once we enable it. Even if a user prepends their own PP2 header to the connection, the cloud provider adds another one in front of it. We parse only the first header and can be sure that it comes from a trusted third party (the cloud provider).
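The "parse only the first header" rule can be sketched in Go by cutting exactly one header off the stream using its length field; anything behind it, including a header a client prepended itself, stays in the payload. `splitFirstPP2` and `looksSpoofed` are hypothetical names. Rejecting a payload that starts with a second signature is an optional hardening step this sketch assumes, not something the providers require; without it, the injected bytes would simply break the TLS handshake.

```go
package main

import (
	"bytes"
	"encoding/binary"
)

var pp2Signature = []byte{0x0D, 0x0A, 0x0D, 0x0A, 0x00, 0x0D, 0x0A, 0x51, 0x55, 0x49, 0x54, 0x0A}

// splitFirstPP2 cuts exactly one PP2 v2 header off the front of buf, using
// only the signature and the 2-byte length field. Everything after it is
// returned untouched in rest.
func splitFirstPP2(buf []byte) (header, rest []byte, ok bool) {
	if len(buf) < 16 || !bytes.HasPrefix(buf, pp2Signature) {
		return nil, buf, false
	}
	end := 16 + int(binary.BigEndian.Uint16(buf[14:16]))
	if len(buf) < end {
		return nil, buf, false
	}
	return buf[:end], buf[end:], true
}

// looksSpoofed reports whether the payload after the trusted header starts
// with another PP2 signature, i.e. a client tried to inject its own header.
func looksSpoofed(rest []byte) bool {
	return bytes.HasPrefix(rest, pp2Signature)
}
```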

Public internet traffic is trickier. As far as I know, only AWS can add PP2 headers to public internet traffic [2].

- AWS: The same NLB can be set up to receive public internet traffic and Private Link Service traffic. All the connections made through this NLB will have a PP2 header added by a trusted third party.

- Azure: We need a different LB for public internet traffic [3]. The traffic from the public internet doesn’t carry PP2 headers. You should configure your proxy in such a way that it trusts the PP2 headers from the Private Link LB and ignores any headers from the public internet LB.

- GCP: Similarly to Azure, the traffic from the public internet doesn’t get GCP-added PP2 headers.
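One way to encode these rules in the proxy is to decide, per listener, whether the first bytes of a connection may be parsed as a provider-added header. A minimal Go sketch; the `Listener` kinds are hypothetical labels for the three setups above. Where the text suggests ignoring headers arriving through the public LB, this sketch goes slightly further and refuses such connections; either way, a client-supplied header is never trusted.

```go
package main

import (
	"bytes"
	"errors"
)

var pp2Signature = []byte{0x0D, 0x0A, 0x0D, 0x0A, 0x00, 0x0D, 0x0A, 0x51, 0x55, 0x49, 0x54, 0x0A}

// Listener is a hypothetical label for where a connection arrived.
type Listener int

const (
	SharedLB      Listener = iota // AWS NLB: public + Private Link, PP2 added to every connection
	PrivateLinkLB                 // Azure/GCP: Private Link only, PP2 added by the provider
	PublicLB                      // Azure/GCP: public internet, no provider-added PP2
)

// trustPP2 reports whether firstBytes may be parsed as a trusted PP2 header.
func trustPP2(l Listener, firstBytes []byte) (bool, error) {
	hasPP2 := bytes.HasPrefix(firstBytes, pp2Signature)
	switch l {
	case SharedLB, PrivateLinkLB:
		if !hasPP2 {
			return false, errors.New("expected a provider-added PP2 header")
		}
		return true, nil
	case PublicLB:
		if hasPP2 {
			// Only the client could have sent it: refuse instead of parsing.
			return false, errors.New("client-supplied PP2 header on public listener")
		}
		return false, nil
	}
	return false, errors.New("unknown listener")
}
```

The check is only meaningful once at least the 12 signature bytes have been read, so the proxy would buffer until it has them (or the connection ends).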

Differences between cloud providers

| | AWS | Azure | GCP |
|---|---|---|---|
| Name | AWS PrivateLink | Azure Private Link | GCP Private Service Connect |
| Allows cross-region connections? | Yes, available in major regions as of November 2024 | Yes, endpoint and service can be in different regions | Yes, endpoint and service need to be in the same region, but you can connect to the endpoint from other regions with Global Access |
| PP2 header format | Link | Link | Link |
| Can share LB with public internet traffic? | Yes | No, see below | No, a dedicated forwarding rule is required |
| Learn more | PrivateLink product page | Private Link overview and docs | Private Service Connect guide |

If you want to parse PP2 headers in Go, or compare notes on an implementation, check the tlvparse package in pires/go-proxyproto, a Go library that supports the formats of all three providers.
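To see the three formats side by side, the Go sketch below turns the provider-specific TLV into a single link ID string. The type codes and value layouts are how I understand the providers' PP2 documentation and the tlvparse package (AWS: type 0xEA, subtype 0x01, ASCII VPC endpoint ID; Azure: type 0xEE, subtype 0x01, little-endian uint32 LinkID; GCP: type 0xE0, big-endian uint64 PSC connection ID). Treat them as assumptions and verify against the docs before relying on them; `linkID` and the `aws:`/`azure:`/`gcp:` prefixes are hypothetical.

```go
package main

import (
	"encoding/binary"
	"strconv"
)

// TLV type codes as assumed in this sketch (verify against provider docs).
const (
	tlvGCP   = 0xE0 // value: 8-byte big-endian PSC connection ID
	tlvAWS   = 0xEA // value: subtype 0x01 + VPC endpoint ID (ASCII)
	tlvAzure = 0xEE // value: subtype 0x01 + 4-byte little-endian LinkID
)

// TLV is one type-length-value extension from a PP2 header.
type TLV struct {
	Type  byte
	Value []byte
}

// linkID returns a provider-prefixed link ID, or "" if no known TLV is present.
func linkID(tlvs []TLV) string {
	for _, t := range tlvs {
		switch {
		case t.Type == tlvAWS && len(t.Value) > 1 && t.Value[0] == 0x01:
			return "aws:" + string(t.Value[1:])
		case t.Type == tlvAzure && len(t.Value) == 5 && t.Value[0] == 0x01:
			id := binary.LittleEndian.Uint32(t.Value[1:])
			return "azure:" + strconv.FormatUint(uint64(id), 10)
		case t.Type == tlvGCP && len(t.Value) == 8:
			return "gcp:" + strconv.FormatUint(binary.BigEndian.Uint64(t.Value), 10)
		}
	}
	return ""
}
```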

Quirks

At least one overlapping AZ is required in AWS

Both the source and the target VPCs need at least one overlapping Availability Zone to form the Private Link connection.

Check the limitations section of the docs, especially:

When the service provider and the consumer have different accounts and use multiple Availability Zones, and the consumer views the VPC endpoint service information, the response only includes the common Availability Zones. For example, when the service provider account uses us-east-1a and us-east-1c and the consumer uses us-east-1a and us-east-1b, the response includes the VPC endpoint services in the common Availability Zone, us-east-1a.

Note that the letters naming the AZs (e.g., a in us-east-1a) are randomized between accounts. AWS internally identifies AZs by numbered zone IDs (e.g., use1-az1) and randomizes the number-to-letter mapping per account [4].

You can check your AZ mapping with the CLI:

```shell
$ aws ec2 describe-availability-zones --region us-east-1 | jq -c '.AvailabilityZones[] | { id: .ZoneId, name: .ZoneName } ' | sort
{"id":"use1-az1","name":"us-east-1c"}
{"id":"use1-az2","name":"us-east-1e"}
{"id":"use1-az3","name":"us-east-1d"}
{"id":"use1-az4","name":"us-east-1a"}
{"id":"use1-az5","name":"us-east-1f"}
{"id":"use1-az6","name":"us-east-1b"}
```
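Because the letters differ between accounts, the overlap should be computed on zone IDs. A short Go sketch, assuming each side has shared its `ZoneId` values (for example from the CLI output above); `commonZoneIDs` is a hypothetical helper.

```go
package main

import "sort"

// commonZoneIDs returns the AZ IDs (e.g. "use1-az4") used by both accounts.
// Compare IDs, not names: "us-east-1a" may be a different physical zone
// in each account.
func commonZoneIDs(provider, consumer []string) []string {
	seen := make(map[string]bool, len(provider))
	for _, id := range provider {
		seen[id] = true
	}
	var common []string
	for _, id := range consumer {
		if seen[id] {
			common = append(common, id)
			delete(seen, id) // avoid duplicates if consumer repeats an ID
		}
	}
	sort.Strings(common)
	return common
}
```

An empty result means the Private Link connection cannot be formed until one side adds a subnet in a shared zone.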

Azure requires different LBs

You need separate LBs for Private Link traffic and public internet traffic. As soon as you attach a Private Link Service to your LB, it stops serving public internet traffic. At least, this is what I found in my tests; as far as I know, this limitation is not documented.

Create two different LBs and test the configuration in a pre-prod account before changing the production configuration.

DNS support

How do customers access our service over Private Link? Each endpoint gets a static IP (from a subnet in the consumer’s VPC) and a DNS alias.

```shell
# How do we pass the domain?
curl -XPOST -H 'Authorization: ...' https://10.0.10.63/emails -d '{
  …
}'
```

The problem is that 10.0.10.63 (or a random DNS alias) won’t match our TLS certs for *.my-saas.io.

This means that our Private Link customers need to manually create a DNS record, resolvable from their VPC, that points megacorp.my-saas.io to 10.0.10.63:

```shell
curl -XPOST -H 'Authorization: ...' https://megacorp.my-saas.io/emails -d '{
  …
}'
```

How do they know that everything is configured correctly? If they forget the DNS record, megacorp.my-saas.io resolves to our public endpoint and the connection goes over the public internet. Our proxy will likely drop it, which should hint at the problem.

Another suggestion would be to use a different domain—unresolvable from the public internet—for the Private Link traffic.

Private DNS integration

The cloud providers offer automatic DNS integration. You can configure your Private Link Service so that any endpoint connecting to it automatically adds my-saas.io (or another domain) to the private DNS of its VPC [5].

None of the providers supports wildcard domains. In our example, we use the subdomain to differentiate between customers. If we can use a different mechanism instead, e.g., an HTTP header or part of the request body, we can simplify the Private Link setup for our customers with the Private DNS integration.

```shell
curl -XPOST -H 'Authorization: ...' https://my-saas.io/emails -d '{
  "account": "megacorp",
  …
}'
```
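On the server side, the tenant then no longer comes from the hostname alone. A Go sketch that keeps per-customer subdomains working and falls back to the `account` field of the JSON body on the shared my-saas.io host; `tenant` is hypothetical. One consequence worth noting: with a shared hostname, SNI no longer identifies the customer, so the link ID check has to run at L7, after TLS termination and request parsing.

```go
package main

import (
	"encoding/json"
	"errors"
	"strings"
)

// tenant identifies the customer for a request: from the subdomain when
// present, otherwise from the "account" field of the JSON body.
func tenant(host string, body []byte) (string, error) {
	const base = "my-saas.io"
	if strings.HasSuffix(host, "."+base) {
		sub := strings.TrimSuffix(host, "."+base)
		if sub == "" || strings.Contains(sub, ".") {
			return "", errors.New("unexpected hostname")
		}
		return sub, nil // per-customer hostname, e.g. megacorp.my-saas.io
	}
	if host != base {
		return "", errors.New("unknown host")
	}
	// Shared hostname (published via private DNS): read the account field.
	var req struct {
		Account string `json:"account"`
	}
	if err := json.Unmarshal(body, &req); err != nil {
		return "", err
	}
	if req.Account == "" {
		return "", errors.New("missing account field")
	}
	return req.Account, nil
}
```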

Summary

We’ve learned about Private Link and how it can act as IP filtering for the cloud. We’ve presented a fictional SaaS company and solved its use case with Private Link. We’ve discussed security considerations, differences in implementation, and quirks of different cloud providers.

Resources

See the Learn more section in the table above.

Elastic Cloud announces their support for Private Link (disclaimer: I work for Elastic at the time of writing; I’ve implemented a large part of the backend support for Elastic Cloud Private Link). This is an example of how Private Link is useful for SaaS providers. More examples:

[2] See Proxy protocol.

[3] I haven’t seen this documented anywhere, but as soon as we associate a private link service with an Azure Load Balancer, the public internet traffic stops flowing. This is worth a separate investigation and a blog post.

[5] Learn more: VPC Endpoint Private DNS.